Welcome to Detection at Scale, a weekly newsletter for SecOps practitioners covering detection engineering, cloud infrastructure, the latest vulns/breaches, and more. We are back after the madness of the RSA and BSides conferences. Enjoy!

Unsurprisingly, RSA Conference 2024 was brimming with security companies championing AI. In Detection and Response tooling, new categories of features are emerging to simplify the tasks of SecOps teams, including automated triage, chatbots, and log summarization, to name a few. This post will explore these nascent categories, explain their role in enhancing team performance, and speculate on their future direction.

We will not cover AI security copilots like Charlotte AI from CrowdStrike and Security Copilot from Microsoft.

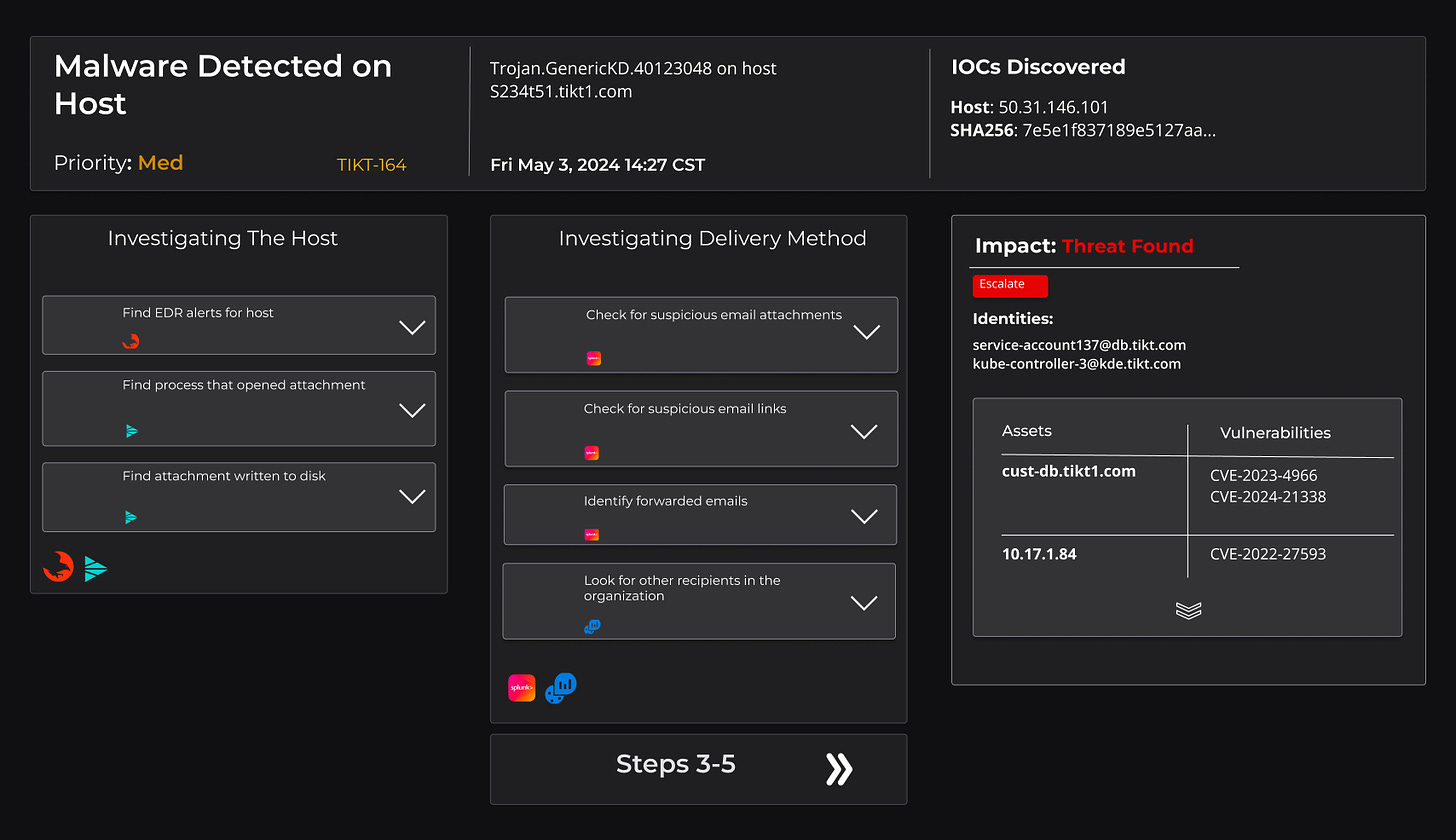

Automated Tier 1 Triage

If SOARs were a response to loud SIEMs, then “Autonomous SOC Analysts” would be their successors.

The idea behind an autonomous SOC analyst is to perform automated end-to-end alert triage and analysis. It works by receiving the alert from the SIEM, gathering organizational context through a series of API calls, answering a series of follow-up questions about the alert, and providing a recommendation and a report of evidence for human consumption and next steps. To succeed, the autonomous agent relies heavily on connections to SIEMs, EDRs, IdPs, and other internal systems for contextualization, similar to SOAR.
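The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the `Alert` and `TriageReport` types, the `idp_context` and `edr_context` enrichers (stand-ins for real IdP and EDR API calls), and the toy decision logic are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Alert:
    rule: str
    user: str
    source_ip: str


@dataclass
class TriageReport:
    alert: Alert
    evidence: dict = field(default_factory=dict)
    recommendation: str = "needs_human_review"


# Hypothetical enrichers standing in for API calls to an IdP and an EDR.
def idp_context(alert: Alert) -> dict:
    known_admins = {"alice"}  # assumption: would come from the IdP
    return {"is_admin": alert.user in known_admins}


def edr_context(alert: Alert) -> dict:
    flagged_ips = {"203.0.113.7"}  # assumption: would come from the EDR
    return {"ip_flagged": alert.source_ip in flagged_ips}


def triage(alert: Alert, enrichers: list[Callable[[Alert], dict]]) -> TriageReport:
    """Gather context from each enricher, then attach a recommendation."""
    report = TriageReport(alert=alert)
    for enrich in enrichers:
        report.evidence.update(enrich(alert))

    # Toy decision logic; a real system would reason over far more evidence.
    if report.evidence.get("ip_flagged"):
        report.recommendation = "escalate"
    elif report.evidence.get("is_admin"):
        report.recommendation = "needs_human_review"
    else:
        report.recommendation = "close_as_benign"
    return report


report = triage(
    Alert(rule="suspicious_login", user="bob", source_ip="203.0.113.7"),
    [idp_context, edr_context],
)
print(report.recommendation, report.evidence)
```

The evidence dictionary travels with the recommendation, so the human reviewing the report can see why the agent reached its conclusion.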

Several startups and products are popping up in the market to begin solving this problem, led by founders with backgrounds in AI, ML, and SecOps.

Alert fatigue is likely the most commonly used buzzword in SIEM and the prime target for autonomous SOC analysts, though there have already been many attempts at curbing this symptom. Many SIEM deployments take an ad hoc approach to writing and deploying rules, which has become a major source of fatigue. Focusing less on 100% MITRE coverage and more on working backward from a clearly defined threat model can reduce the barrage of alerts and the time wasted chasing down alerts that don’t actually matter for your organization.

A few autonomous SOC analyst products have been marketing the downfall or replacement of the “Tier 1” job, but we believe they will make Tier 1 analysts much better at what they do, freeing them up to learn a new programming language (like Python) or dive into the world of GenAI to optimize the outcomes of these tools.

There is no replacement for human intuition in a highly contextual task like IR, but we can certainly welcome tools that remove repetitive tasks!

Autonomous SOC analysts won’t solve the alert fatigue problem alone, but they can automate the rote tasks more easily! With the right data, they can also reason about the internal playbooks relevant to your threat model and recommend a next step for humans.

These products can replace SOAR products if they are predictable, reliable, and cost-efficient enough. However, SOAR products are well-positioned to enter the arena here due to their inherent customer base, existing automation, and numerous ecosystem integrations, which will need to be rebuilt by these autonomous SOC analyst platforms.

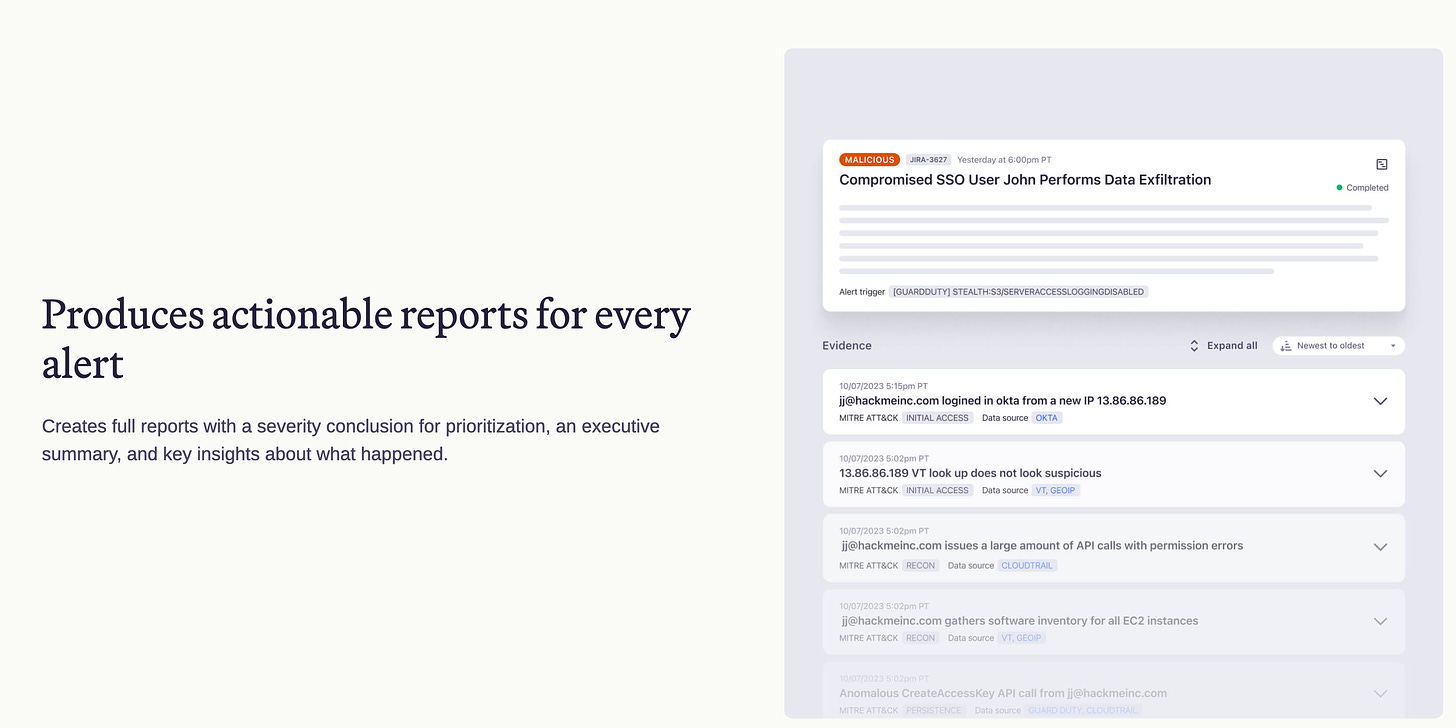

Keeping Humans in the Loop with AI Chatbots

In the theme of automating rote IR tasks, GenAI also swings the door open to automating “organizational Q&A” for security teams.

In SecOps, distinguishing legitimate behavior from an attacker’s is often very challenging, so a simple technique for improving alert fidelity is asking the person involved, “Did you do this?” While their Slack access could certainly be compromised, there are options for adding a second factor to the loop to ensure the responses are legitimate.

An example is a rule monitoring for logins to a production system from a foreign country where that organization employs no people. While the best bet is to use as much situational information as we can access (maybe gathered by our autonomous SOC analyst), simply asking can add to the list of evidence, and their recorded responses can provide a great audit trail.
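As a rough sketch of that example, the rule and the "Did you do this?" follow-up might look like the code below. Everything here is hypothetical: the `EMPLOYEE_COUNTRIES` set, the `LoginEvent` shape, and the in-memory `audit_log` list are placeholders, and actually delivering the question (over Slack or otherwise) and verifying a second factor are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Assumption: countries where the organization employs people.
EMPLOYEE_COUNTRIES = {"US", "CA", "GB"}


@dataclass
class LoginEvent:
    user: str
    system: str
    country: str


def is_suspicious(event: LoginEvent) -> bool:
    """Flag production logins from countries with no employees."""
    return event.system == "production" and event.country not in EMPLOYEE_COUNTRIES


def build_question(event: LoginEvent) -> str:
    """Compose the message a chatbot would send to the user."""
    return (
        f"Hi {event.user}, we saw a login to {event.system} "
        f"from {event.country}. Did you do this?"
    )


def record_response(event: LoginEvent, answer: str, audit_log: list) -> dict:
    """Store the user's answer alongside the question for the audit trail."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": event.user,
        "question": build_question(event),
        "answer": answer,
        "confirmed": answer.strip().lower() in {"yes", "y"},
    }
    audit_log.append(entry)
    return entry


audit_log = []
event = LoginEvent(user="chris", system="production", country="BR")
if is_suspicious(event):
    print(build_question(event))
    print(record_response(event, "no", audit_log)["confirmed"])
```

The recorded answer is one more piece of evidence rather than a verdict; a "yes" from a possibly compromised account should not close the alert on its own.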

In 2017, Dropbox released securitybot with the following premise:

Ignoring alerts is tempting. After all, for every alert that involves a person, a member of the security team needs to manually reach out to them. More alerts means more work: we all know that Chris runs nmap about six times a day, and the SREs need to run sudo fairly often. So we can just ignore those alerts, right? Wrong. This sets a dangerous precedent that never ends well. There’s a clear need for a system that can reduce the burden of alerts for the security team.

AI evolves these chatbots from hard-coded scripts to fluent conversations, and who is better to carry on the legacy than OpenAI themselves? OpenAI’s security team recently open-sourced their version of a security chatbot, designed to politely (and occasionally firmly) ask employees if they meant to perform a behavior that could lead to a breach or other negative outcome. This takes a high-fidelity approach with conversational flows, clarifying questions, sentiment analysis, and a conversation summary for the IR team to digest.

This chatbot is integrated after the SIEM, similar to the autonomous SOC analyst. It then has its own set of flows, databases, and application logic to enrich alerts for review by a human. AI chatbots are the ultimate example of Detection at Scale. As the company grows, the security team can scale its processes through code and automation, reducing costs and resulting in leaner teams. That’s the vision of developing this generation of security tooling.

Chatbot tooling will likely continue as standalone, one-off OSS projects or get folded into existing Slack integrations with enterprise security tooling, like SIEMs and other cloud-based detection platforms.

Log Summarization and Analysis

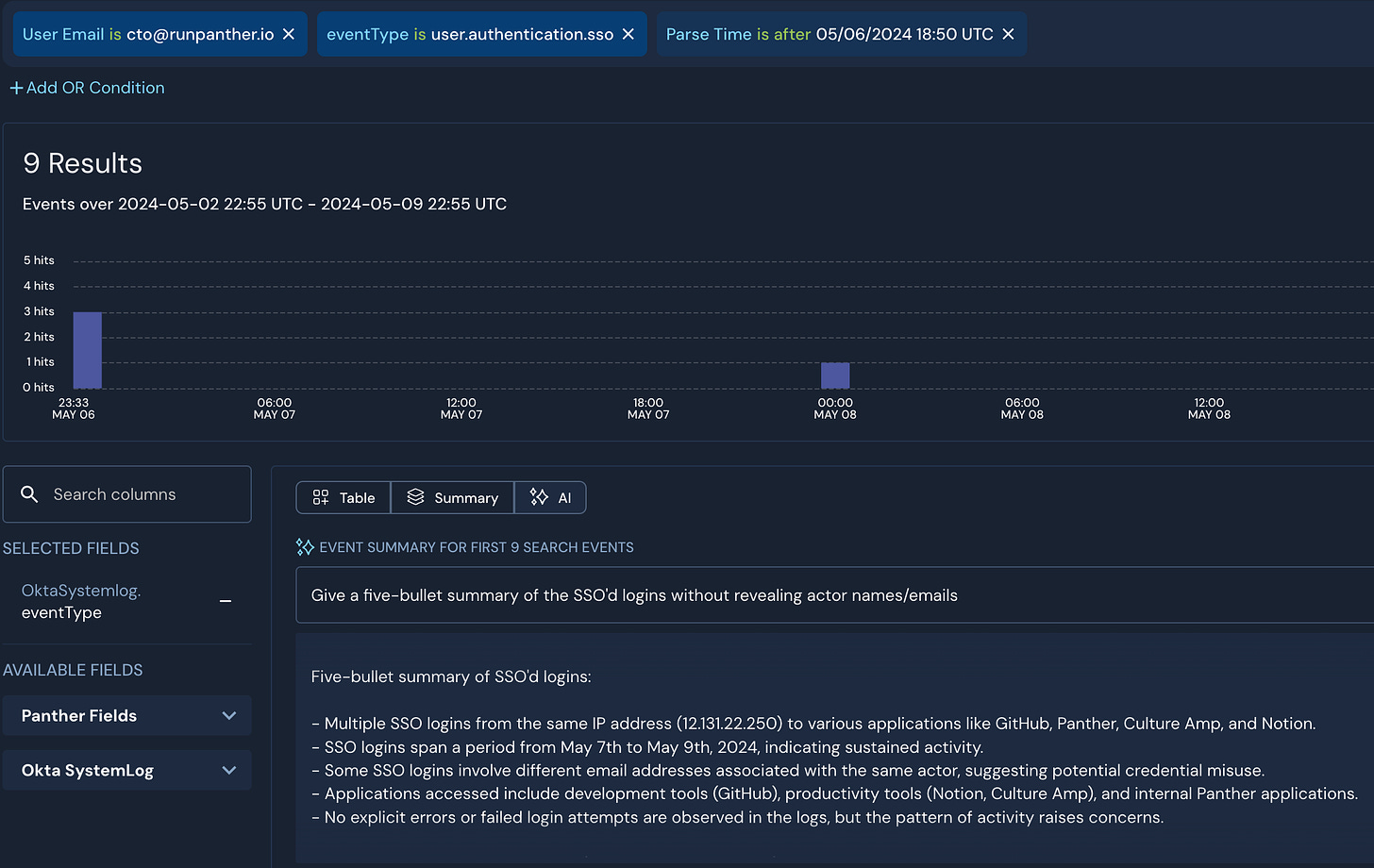

The SIEM is the third natural place to inject GenAI in Detection and Response, with data summarization, pattern matching, and natural language log analysis.

Logs are generated and consumed by machines but read by humans.

In migrating from monolithic SIEMs to a modern data lake architecture, log data has become beautifully formatted and column-based, the foundation for enabling data processing with code and AI to improve our comprehension at scale. To read more on the subject, check out our post that explores big data architectures and technologies.

GenAI is the new bridge for quickly extracting the most pertinent facts from logs and asking simple questions about a particular part of a log. This includes translating data like user agent strings, request parameters, and other deeply nested data structures into a format humans can easily understand. The benefit is that we spend less time comprehending elements of logs and rely less on memorizing the entire audit log API of a particular application, thus saving a query or a Google search. It’s also useful for deriving interesting patterns and intersections between activities surfaced by correlations.
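To make the "translation" idea concrete, here is a small deterministic stand-in for the kind of output one might ask a model to produce. It is only a sketch: the log field names (`method`, `url`, `user_agent`) are assumed, the user agent check is deliberately naive, and no LLM is involved. The same function could just as easily serve as pre-processing before a log is handed to a model.

```python
from urllib.parse import parse_qs, urlsplit


def describe_request(log: dict) -> str:
    """Turn a raw HTTP log record into a one-line, human-readable summary."""
    parts = urlsplit(log["url"])
    params = parse_qs(parts.query)

    # Naive user agent interpretation; real strings need a proper parser.
    user_agent = log.get("user_agent", "")
    if user_agent.startswith("curl/"):
        client = "curl (command-line client)"
    else:
        client = "a browser or unknown client"

    # Show the first value of each parameter, in a stable order.
    param_text = ", ".join(
        f"{key}={values[0]}" for key, values in sorted(params.items())
    ) or "no parameters"

    return (
        f"{log['method']} to {parts.path} on {parts.netloc} "
        f"with {param_text}, sent by {client}"
    )


print(describe_request({
    "method": "GET",
    "url": "https://api.example.com/v1/users?role=admin&limit=5",
    "user_agent": "curl/8.4.0",
}))
# GET to /v1/users on api.example.com with limit=5, role=admin, sent by curl (command-line client)
```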

Each analyst on a security team sees the world through a particular lens, which can be a defining strength but also comes with inherent biases. While LLMs have biases of their own, they more often than not introduce a new perspective and draw connections that humans have a harder time making due to our limited attention and memory. This perspective can inadvertently train us to be better!

With the advent of RAG techniques, log summarization will become more contextual to an organization, technical stack, and security playbook. SIEM and similar tooling will inevitably adopt GenAI for summarization, NLP search, and other interesting techniques for log analysis.
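The retrieval step behind RAG can be illustrated with a toy example. Note the swap: production systems typically rank documents with embeddings and a vector store, while this sketch uses plain keyword overlap so it stays self-contained. The playbook names and text are invented, and the resulting prompt is only built, not sent to any model.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 1) -> list[str]:
    """Return the names of the k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda name: len(query_tokens & tokenize(documents[name])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(log_line: str, documents: dict[str, str], k: int = 1) -> str:
    """Prepend the most relevant playbook text to a summarization request."""
    names = retrieve(log_line, documents, k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return f"Context:\n{context}\n\nSummarize this log for an analyst:\n{log_line}"


# Hypothetical internal playbooks.
playbooks = {
    "okta_playbook": "For okta login failures check mfa enrollment and recent password resets",
    "aws_playbook": "For aws iam changes review cloudtrail and the assumed role",
}

print(build_prompt("okta login failure for user chris from new device", playbooks))
```

Swapping the overlap score for an embedding similarity changes only `retrieve`; the prompt assembly stays the same, which is why the organizational context can grow without reworking the summarization step.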

Embracing the Wave

Are we at the top of the AI hype cycle? Likely not. It’s hard to deny that the developments in the GenAI ecosystem will continue making SecOps teams better, faster, and smarter than attackers. The use cases of autonomous triage, conversational chatbots, and log data summarization are just the beginning of what’s possible.

In the hierarchy of SecOps needs, great data access and quality are the base. These tools will only be successful with that foundation, coupled with the organizational context that comes from internal threat models, identities, and other practices.

Embrace the wave and start learning now! Thanks for reading.

Right I think it’s a mistake to conflate human-like chatbots with statistical forecasting and prediction models. And right now we’ve got hype and risk of overvaluation. Something SV loves. It will hopefully inspire some innovation in tedious work and I’m most excited about fraud, abuse, anomaly detection. A lot of areas really. Seems that innovation has stagnated lately and attackers are getting off too easy (lapsus$ breaching big names from a hotel room, various groups dropping zero days against random network edge appliances, we can’t seem to stop ransom or convince companies to acquire and deploy the solutions we’re selling). So it irks me that insiders are cringing over the conferences being oversaturated with AI content.

What TF else are we talking about friends? Don’t like the content at RSA? Welcome to the club friend. It always sucked.