Scaling Detection Writing with LLMs

Leveraging AI to Enhance Security Operations and Democratize Detection Engineering.

While nothing can replace the intuition of an experienced detection engineer, LLMs can streamline our thought processes, optimize the time needed to develop detection rules, and, most importantly, lower the barrier to entry for non-developers.

LLMs are (imagined as) the future operating system, augmenting existing software tooling. As language models grow more capable, familiarity with this technology will let us reap future benefits such as advanced reasoning, or potentially even the elusive artificial general intelligence (AGI).

In this blog post, we will use LLMs to research, plan, and develop a Python-based SIEM rule that monitors suspicious GitHub repo actions that could indicate account compromise. By learning these techniques, you can quickly scale your detection engineering function and generate any rule for any log type.

If you missed the first post on LLM fundamentals, check it out for a baseline on models, prompting techniques, and practical use cases for SecOps.

This blog also complements my recent appearance on “GitHub Start Up Wednesday.” The first part is about my journey to becoming a founder, and the second part is a demo.

Start with Why: Account Compromise

Threat modeling is powerful because it forces you to consider your risk factors on paper and prioritize what’s most important. Analytical tasks like this are great use cases for LLMs. Detections should be created intentionally to secure the business, rather than enabling a rule “just because.” Coupling your threat model with the latest attack trends also helps refine and prioritize the high-risk behaviors that are common today. Search-based LLM tooling like Perplexity.ai can help gather this inspiration. For this example, let’s research how attackers breach GitHub repositories containing our intellectual property:

These results are helpful and include references to reputable sources like SentinelOne’s blog and BleepingComputer. Let’s focus on the Account Compromise use case, an attack vector worth monitoring at most organizations:

Account compromise occurs when an unauthorized party gains control over a user's account credentials to act on behalf of the legitimate account owner.

This will act as “the why” behind our rule.

Deeper Research: Grok the Logs

Leveraging LLMs to interpret and explain SaaS log documentation can simplify SIEM rule development. A thorough understanding of the log schema and the actions it audits helps us write accurate rules and avoid alerting on benign activity.

In our example, we will apply Account Compromise to GitHub logs.

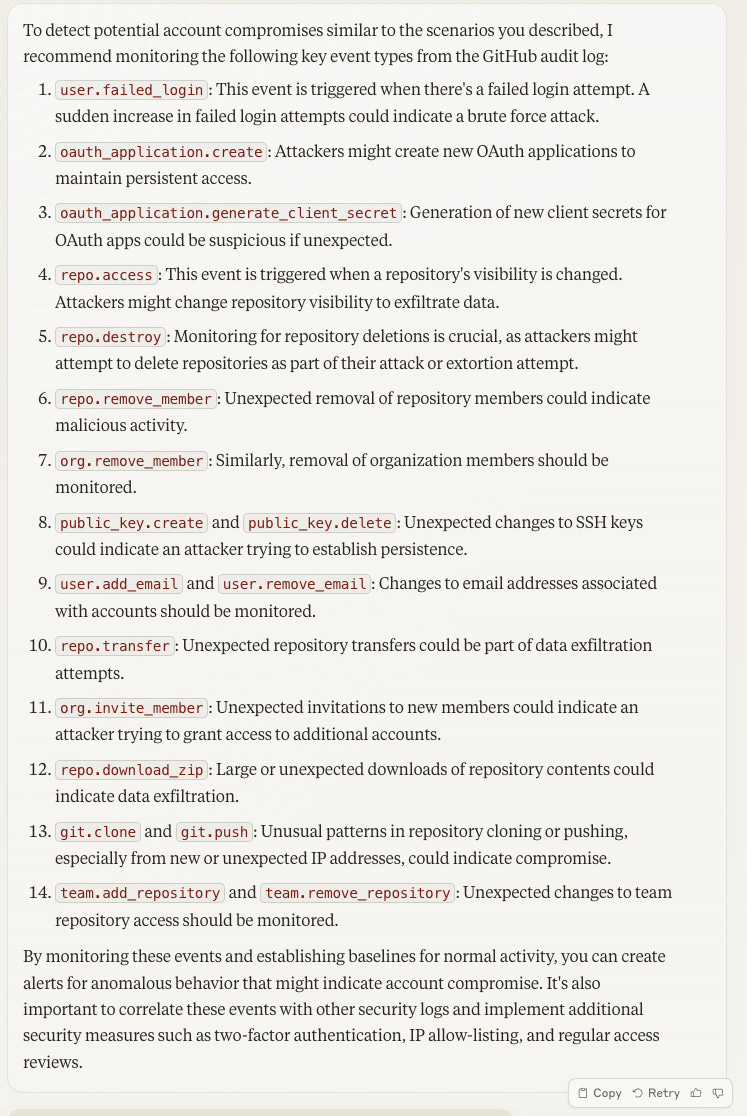

GitHub’s documentation is here, and in a nutshell, these logs monitor a wide range of activities across user accounts, repos, and orgs, including authentication, access changes, code changes, security settings, and administrative actions. Let’s download the page as a PDF and ask our LLM (Claude, in this case) to point us to the exact event types to monitor:

“You're a cybersecurity SIEM, Detection Engineering, and Python expert. Help me answer questions about this attached PDF. If you don’t know the answer, do not make up information. Which GitHub Audit event types should I monitor to detect an account compromise similar to these prior breaches:

In 2020, Microsoft's GitHub account was compromised, resulting in the theft of 500GB of data from private repositories.

A recent campaign dubbed "Gitloker" has involved attackers compromising GitHub accounts, wiping repository contents, and attempting to extort victims.”

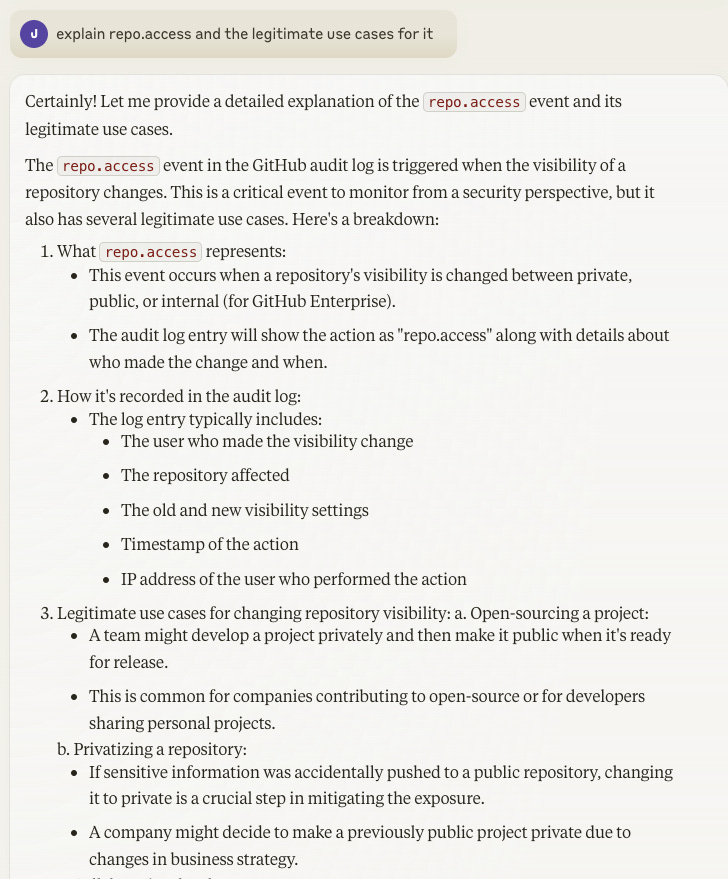

This is helpful context. We can also ask clarifying questions about the audit logs, such as related actions, possible parameters, or legitimate use cases for these events to occur (to avoid common false positives):

For our account compromise rule, let’s focus on repo actions and assume that the GitHub user account has already been compromised through some other means.

Prompting: Context, Examples, and Instruction

Writing a good rule requires knowledge about 1) attacker TTPs, 2) the SIEM detection rule language, and 3) the structure of your logs.

To write our GitHub account compromise rule, we’ll utilize prompting techniques like role assignments, context, examples, and clear instructions. Our goal is to capture the thought process of a detection engineer so we can scale and delegate more across the team. We can also encode internal conventions and coding practices that are often unwritten and specific to our team.

Here’s the high-level prompt structure:

Introduction: Role assignment and goal

Example: What a good rule looks like

Event Schema: The structure of the logs we are analyzing

Required Methods and Attributes: Explain the Rule declaration

Instruction: What we want the LLM to do

Labeling the parts of our prompt improves the quality of responses. Remember, a clear prompt should be easily comprehensible to someone unfamiliar with the task! While we use Claude in these examples, the same principles apply to other platforms.

When programming with LLMs, it’s common practice to build a “system” prompt that defines the context needed to perform the task (read more here). Think of this as the “middleware” between the user and the LLM. Everything in the prompt below, up to the “Instruction,” acts as our system prompt. This way, anyone interacting with this chatbot can supply a “user” query that is more natural and less nuanced.
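As a rough sketch of that split, the snippet below joins labeled sections into a single system prompt and keeps the analyst's request as a separate user message. The function names, the placeholder section text, and the dictionary layout are illustrative assumptions rather than any specific vendor's API; adapt the final payload to whatever client library you use.

```python
from typing import Dict, List


def build_system_prompt(sections: Dict[str, str]) -> str:
    """Join labeled sections into one prompt, one 'Label:' header per section."""
    parts = [f"{label}:\n{body.strip()}" for label, body in sections.items()]
    return "\n\n".join(parts)


def build_request(system_prompt: str, user_query: str) -> Dict[str, object]:
    """Keep reusable context (system) apart from the per-request instruction (user)."""
    messages: List[Dict[str, str]] = [{"role": "user", "content": user_query}]
    return {"system": system_prompt, "messages": messages}


# Placeholder section bodies; the real ones are the prompt sections in this post.
sections = {
    "Introduction": "You are an expert cybersecurity detection engineer...",
    "Example Rule": "<a known-good rule goes here>",
    "Log Schema": "- action: team.create\n- actor: octocat",
    "Required Methods": "- rule(self, event) -> bool",
}

system_prompt = build_system_prompt(sections)
request = build_request(system_prompt, "Write a rule to detect sensitive repo actions.")
print(request["system"].splitlines()[0])  # Introduction:
```

Because the system prompt is built once and reused, only the user query changes from one rule request to the next.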

Let’s start with an introduction: Role assignment and goals.

Introduction:

You are an expert cybersecurity detection engineer tasked with analyzing log data to detect malicious events. Your goal is to create a Python class using the `pypanther` library that implements a rule for determining whether a given event is malicious. Follow the conventions outlined below to ensure compatibility with the Panther platform.

Imagine role setting as setting the identity of the person completing the work. This provides nuance and expertise to our answers that would have otherwise been generic. Let’s then add an example of what a “good” rule looks like up-front:

Example Rule:

```python
from pypanther import LogType, Rule, RuleTest, Severity


class GithubRepoCreated(Rule):
    id = "Github.Repo.Created-prototype"
    display_name = "GitHub Repository Created"
    log_types = [LogType.GITHUB_AUDIT]
    tags = ["GitHub"]
    default_severity = Severity.INFO
    default_description = "Detects when a repository is created."

    def rule(self, event):
        return event.get("action") == "repo.create"

    def title(self, event):
        return f"Repository [{event.get('repo')}] created."

    tests = [
        RuleTest(
            name="GitHub - Repo Created",
            expected_result=True,
            log={
                "actor": "cat",
                "action": "repo.create",
                "created_at": 1621305118553,
                "org": "my-org",
                "p_log_type": "GitHub.Audit",
                "repo": "my-org/my-repo",
            },
        ),
        RuleTest(
            name="GitHub - Repo Archived",
            expected_result=False,
            log={
                "actor": "cat",
                "action": "repo.archived",
                "created_at": 1621305118553,
                "org": "my-org",
                "p_log_type": "GitHub.Audit",
                "repo": "my-org/my-repo",
            },
        ),
    ]
```

While we use pypanther in this example, you could supply examples in any SIEM rule language, such as Sigma, Elastic, or Chronicle. Putting the examples near the top of the prompt primes the model with the desired output format and allows it to formulate its response strategy earlier.
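To make the relationship between `rule()` and `tests` concrete, here is a minimal, dependency-free sketch of what running those test cases amounts to. `FakeRuleTest`, `RepoCreatedLogic`, and `run_tests` are hypothetical stand-ins written for this illustration; they are not part of pypanther, which ships its own test tooling.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class FakeRuleTest:
    """Hypothetical stand-in for a rule test case: a sample log plus the expected verdict."""
    name: str
    expected_result: bool
    log: Dict[str, Any]


class RepoCreatedLogic:
    """Same matching logic as the example rule, without the pypanther base class."""

    def rule(self, event: Dict[str, Any]) -> bool:
        return event.get("action") == "repo.create"


def run_tests(rule_obj: RepoCreatedLogic, tests: List[FakeRuleTest]) -> Dict[str, bool]:
    """Return {test name: passed}, where passed means rule() matched the expectation."""
    return {t.name: rule_obj.rule(t.log) == t.expected_result for t in tests}


tests = [
    FakeRuleTest("GitHub - Repo Created", True, {"action": "repo.create", "repo": "my-org/my-repo"}),
    FakeRuleTest("GitHub - Repo Archived", False, {"action": "repo.archived", "repo": "my-org/my-repo"}),
]

results = run_tests(RepoCreatedLogic(), tests)
print(results)
```

Including one matching and one non-matching log in the example gives the model a pattern to imitate when it writes tests for new rules.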

By specifying the log schema (from the docs), we tell the LLM which fields can be referenced in the rule() function:

Log Schema (with example values):

- action: team.create

- actor: octocat

- user: codertocat

- actor_location.country_code: US

- org: octo-org

- repo: octo-org/documentation

- created_at: 1429548104000 (Timestamp shows the time since Epoch with milliseconds.)

- data.email: octocat@nowhere.com

- data.hook_id: 245

- data.events: ["issues", "issue_comment", "pull_request", "pull_request_review_comment"]

- data.events_were: ["push", "pull_request", "issues"]

- data.target_login: octocat

- data.old_user: hubot

- data.team: octo-org/engineering

The schema helps us write an accurate rule and better translate our requirements to code. We can also codify the nuance of the system so the LLM can better contextualize the example and understand our requirements. For example, the threshold attribute defines a minimum number of events before the rule fires:

Coding Conventions:

- Use simple return statements at the end of the rule method.

- Only create variables if they are re-used multiple times.

Required Methods:

- `rule(self, event: Dict[str, Any]) -> bool`: Determines if an alert should be sent. Returns `True` if the event matches the rule criteria, `False` otherwise.

- `title(self, event: Dict[str, Any]) -> str`: Returns a human-readable alert title.

Key Attributes:

- `id` (str): Unique identifier (e.g., "AWS.ALB.HighVol400s").

- `enabled` (bool): Rule activation status.

- `log_types` (List[LogType]): Applicable log types.

- `threshold` (int): Minimum matches to trigger an alert.

- `dedup_period_minutes` (int): Alert grouping time period.

- `reports` (Dict[str, List[str]]): Security framework mappings.

- `default_severity` (Severity): The risk level of the behavior.

- `default_runbook` (str): Steps to triage the alert and recommend next steps.

- `default_reference` (str): Reference to additional information about the alert.

- `default_description` (str): Brief explanation of the rule's purpose.

- `tests` (List[RuleTest]): A list of test cases for the rule methods.

So far, the prompt contains a high-level goal, a role assignment, an example rule, a breakdown of the audit log, and an explanation of what each method and attribute means in the rule. This is likely enough context. Remember, less is more with prompts. If the prompt grows too long, for example by including several PDF documents and thousands of lines, we may get worse results.
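If you would rather derive the Log Schema section from a sample event than copy it from the docs by hand, a small helper can flatten nested fields into the dotted paths used in the schema listing. This is a sketch that assumes your sample event is a nested dictionary; `flatten_event`, `schema_lines`, and the trimmed sample event are made up for illustration.

```python
from typing import Any, Dict, List


def flatten_event(event: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dotted paths, e.g. {'data': {'team': 'x'}} -> {'data.team': 'x'}."""
    flat: Dict[str, Any] = {}
    for key, value in event.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten_event(value, path))
        else:
            flat[path] = value  # lists and scalars are kept as leaf values
    return flat


def schema_lines(event: Dict[str, Any]) -> List[str]:
    """Render one '- field: example' line per leaf field for pasting into a prompt."""
    return [f"- {path}: {value}" for path, value in flatten_event(event).items()]


sample_event = {
    "action": "team.create",
    "actor": "octocat",
    "actor_location": {"country_code": "US"},
    "data": {"team": "octo-org/engineering", "hook_id": 245},
}

print("\n".join(schema_lines(sample_event)))
```

Keeping the schema to a handful of representative fields fits the "less is more" guidance: include what the rule is likely to need, not every field the log source can emit.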

Instruction:

Write a rule to detect when multiple sensitive GitHub actions are taken on repos: transferred, downloaded, or deleted. The rule should be set to a default high severity, only alert if at least two or more events occur within a 60-minute window, include a MITRE ATT&CK mapping to the "Impact" tactic, have at least three test cases, and compare against a list of known sensitive repos.

Not. Bad.

    # Imports needed by the generated rule and its test timestamps
    from datetime import datetime, timedelta

    from pypanther import LogType, Rule, RuleTest, Severity


    class GithubSensitiveActions(Rule):
        id = "Github.Sensitive.Actions"
        display_name = "GitHub Sensitive Actions on Repos"
        log_types = [LogType.GITHUB_AUDIT]
        tags = ["GitHubDemo", "MITRE.Impact"]
        default_severity = Severity.HIGH
        default_description = "Detects when multiple sensitive actions are taken on repositories."
        threshold = 2
        dedup_period_minutes = 60
        reports = {"MITRE": ["T1485", "T1531", "T1486"]}  # Example MITRE ATT&CK mappings

        sensitive_actions = {"repo.transferred", "repo.downloaded", "repo.deleted"}
        sensitive_repos = {"sensitive-repo-1", "sensitive-repo-2", "sensitive-repo-3"}

        def rule(self, event):
            action = event.get("action")
            repo = event.get("repo")
            return action in self.sensitive_actions and repo in self.sensitive_repos

        def title(self, event):
            return f"Sensitive action [{event.get('action')}] on repository [{event.get('repo')}]"

        def dedup(self, event):
            return f"{event.get('repo')}-{event.get('action')}"

        tests = [  # Only first two shown
            RuleTest(
                name="GitHub - Repo Transferred",
                expected_result=True,
                log={
                    "actor": "octocat",
                    "action": "repo.transferred",
                    "created_at": int((datetime.utcnow() - timedelta(minutes=30)).timestamp() * 1000),
                    "org": "octo-org",
                    "p_log_type": "GitHub.Audit",
                    "repo": "sensitive-repo-1",
                },
            ),
            RuleTest(
                name="GitHub - Non-sensitive Repo Action",
                expected_result=False,
                log={
                    "actor": "octocat",
                    "action": "repo.transferred",
                    "created_at": int((datetime.utcnow() - timedelta(minutes=30)).timestamp() * 1000),
                    "org": "octo-org",
                    "p_log_type": "GitHub.Audit",
                    "repo": "non-sensitive-repo",
                },
            ),
        ]

This rule worked on the first try, which is impressive! Notice that I didn’t instruct it to set the dedup function, but since it understands the methods and their purpose, it could build one correctly. This exemplifies the power of good prompts.
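To build intuition for how `threshold`, `dedup_period_minutes`, and the `dedup()` string interact, the sketch below models them in plain Python: matching events are bucketed by their dedup string, and a bucket alerts once it holds at least `threshold` events inside the window. This is a simplified mental model written for this post, not Panther's actual implementation, and `dedup_key` and `would_alert` are hypothetical helpers.

```python
from collections import defaultdict
from typing import Any, Dict, List

THRESHOLD = 2
DEDUP_PERIOD_MS = 60 * 60 * 1000  # 60 minutes, in the same unit as created_at


def dedup_key(event: Dict[str, Any]) -> str:
    """Mirror the generated dedup(): group by repo and action."""
    return f"{event.get('repo')}-{event.get('action')}"


def would_alert(events: List[Dict[str, Any]]) -> List[str]:
    """Return dedup keys with >= THRESHOLD events inside one dedup window."""
    buckets: Dict[str, List[int]] = defaultdict(list)
    for event in events:
        buckets[dedup_key(event)].append(event["created_at"])

    alerting = []
    for key, stamps in buckets.items():
        stamps.sort()
        # Slide over each run of THRESHOLD consecutive timestamps.
        for i in range(len(stamps) - THRESHOLD + 1):
            if stamps[i + THRESHOLD - 1] - stamps[i] <= DEDUP_PERIOD_MS:
                alerting.append(key)
                break
    return alerting


events = [
    {"repo": "sensitive-repo-1", "action": "repo.deleted", "created_at": 0},
    {"repo": "sensitive-repo-1", "action": "repo.deleted", "created_at": 10 * 60 * 1000},
    {"repo": "sensitive-repo-2", "action": "repo.transferred", "created_at": 0},
    {"repo": "sensitive-repo-2", "action": "repo.downloaded", "created_at": 5 * 60 * 1000},
]

print(would_alert(events))
```

Under this simplified model, two different actions on the same repo land in separate buckets and never reach the threshold together, so keying the dedup string on the repo alone may be a refinement worth asking the LLM for.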

The beauty of LLMs is that your refinements can also be written using natural language! If it’s not perfect on the first try, that’s totally fine.

Where We Go From Here

LLMs are changing the way we work in a real way, and there are plenty of great use cases for security operations to make our lives easier.

As more security teams adopt detection as code, LLMs can be a (somewhat trusted) companion. It might look like a lot of work to achieve the resultant rule, but remember that LLM output scales non-linearly. In traditional software development, output is deterministic based on input and instructions, but LLMs can produce immense amounts of new content on command.

What we did:

Researched the latest attack trends

Dove deep into a log source

Built a re-usable prompt for our SIEM of choice

Benefits:

Scale your small team of detection engineers

Lower the barrier to entry for detection as code and more capable analysis languages

Reuse the system prompt you built

We are still in the early innings, and there is likely a future ahead where LLMs take on the rote rule writing and tuning while we redefine what a “detection engineer” means. It’s still day 1, and there is much to be excited about.