The Agentic SIEM

A practical guide to understanding how AI agents will transform security operations from basic automation to intelligent analysis

Welcome to Detection at Scale—a weekly newsletter covering security monitoring, cloud infrastructure, the latest breaches, and more. Enjoy!

Two decades ago, security teams collected logs because auditors told them to. Then SIEMs emerged to help make sense of it all, but we quickly discovered they were better at creating alerts than helping us understand them. While detection engineering helped tame the noise, we still have a fundamental challenge: analyzing massive volumes of security signals at human speed.

Enter AI agents. Unlike the GenAI chatbots and tools that dominated cybersecurity headlines in 2024, agents are purpose-built assistants that can think, reason, and act within defined boundaries. They're not here to replace your SOC team but to create a new analytical layer that operates at machine speed with human-like reasoning.

The concept isn't entirely new. We've had automated playbooks and SOAR workflows for years, but there's a crucial difference: traditional automation follows predetermined paths, while agents can adapt, learn, and make informed decisions based on the broader context and history of your environment. AI agents will transform SIEM from a sophisticated log aggregator into an intelligent analytical platform—acting as analysts with impressive memories that can spot patterns across millions of events and never need coffee.

In this post, we'll explore how AI agents can create a new analytical layer: how they maintain strong memory across millions of relevant events, which built-in safety mechanisms keep them operating reliably, and how dynamic, context-aware analysis can transform investigations in practice.

Before diving in, let's be clear: AI agents aren't a magic solution to all your security challenges. Just as detection engineering requires careful thought and iteration, building effective security agents demands understanding their capabilities, their limitations, and the right way to deploy them. The rewards, however, can be transformative for teams willing to embrace this new paradigm.

Beyond Traditional Automation

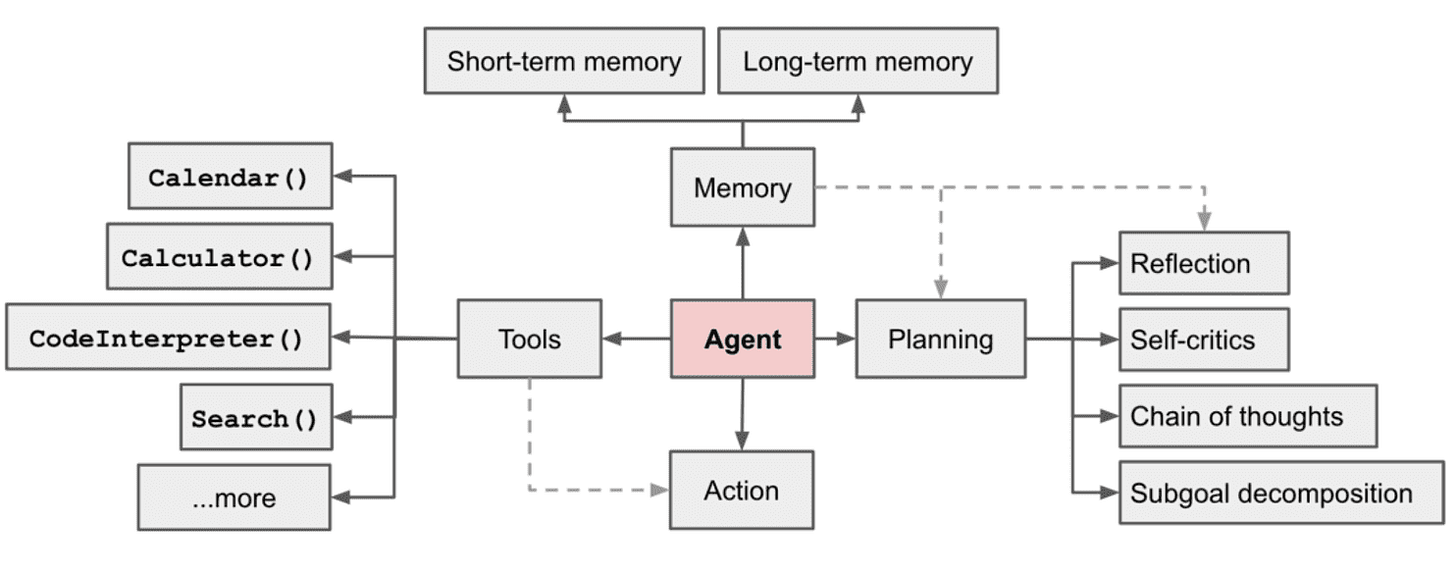

Let's clarify what "agent" means: a system that can understand its environment, make decisions, and take actions to achieve specific goals. Agents are specialized programs that perform analytical tasks traditionally reserved for humans. Like any security tool, their effectiveness must be measurable, for example through alert accuracy or mean time to resolution.
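To make "measurable" concrete, here is a minimal sketch of how those two metrics might be computed from closed alerts. The `ClosedAlert` record and its field names are assumptions for illustration, not the schema of any particular SIEM, and "accuracy" is taken here to mean agreement between the agent's verdict and an analyst's.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ClosedAlert:
    # Hypothetical record shape; real SIEM schemas will differ.
    opened_at: datetime
    resolved_at: datetime
    agent_verdict: str    # e.g. "malicious" or "benign", as judged by the agent
    analyst_verdict: str  # verdict assigned by a human reviewer


def alert_accuracy(alerts: list[ClosedAlert]) -> float:
    """Fraction of alerts where the agent agreed with the analyst."""
    if not alerts:
        return 0.0
    agreed = sum(a.agent_verdict == a.analyst_verdict for a in alerts)
    return agreed / len(alerts)


def mean_time_to_resolution(alerts: list[ClosedAlert]) -> timedelta:
    """Average time between an alert opening and being resolved."""
    if not alerts:
        return timedelta(0)
    total = sum((a.resolved_at - a.opened_at for a in alerts), timedelta(0))
    return total / len(alerts)
```

Tracking both numbers before and after introducing an agent gives a baseline to judge whether it is actually helping.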

The most immediate and impactful application of AI agents in security operations is handling the constant stream of alerts and incidents that flood our teams. While SOAR and automation help with known and repetitive paths, AI agents can dynamically assess and take the most efficient route to resolution.

Consider the current workflow:

SIEM rules fire on suspicious activity

Alerts route to teams via Slack/email/ticketing

Enrichment resolves IPs or assets

The analyst performs several follow-up queries/pings

Decisions are made, documented, and closed

While this process works for dozens of alerts per day, it quickly breaks down at scale. Even with sound detection engineering and SOAR playbooks, we're still constrained by basic if-then logic: "If severity=high and source=production, then..." Actual triage and investigation rarely follow such linear paths.

This workflow also concentrates institutional knowledge in individual team members, and that knowledge is often lost when people change jobs. Codifying what was learned back into the system for future benefit depends entirely on human rigor.

<Soapbox>I have long held an opinion that SOAR, as a solution to validate SIEM alerts, is an anti-pattern. If you must perform the same actions every time, the SIEM should hold these capabilities internally, while the SOAR should be remediating. </Soapbox>

AI agents can change this paradigm by working like experienced analysts. They draw on the appropriate suite of tools available through APIs, from threat intelligence platforms to asset management systems and identity providers, and fetch answers dynamically rather than following prescribed pathways. This is especially helpful when moving past simple situational awareness and into discovery. Predicting the exact queries you’ll need to run is often difficult since attackers could take many paths through your environment.
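As a rough sketch of what "fetching answers dynamically" can look like, the example below registers a few tools and lets a selection function decide which one to call next based on what is still unknown. Everything here is hypothetical: the tool stubs return canned data, and `choose_next_tool` is a simple stand-in for the model's reasoning, not how any specific product works.

```python
from typing import Callable, Optional


# Hypothetical tool stubs; real ones would call threat intel,
# asset management, and identity provider APIs.
def lookup_ip_reputation(alert: dict) -> dict:
    return {"ip_reputation": "suspicious"}


def lookup_asset_owner(alert: dict) -> dict:
    return {"asset_owner": "payments-team"}


def lookup_user_identity(alert: dict) -> dict:
    return {"user_role": "contractor"}


TOOLS: dict[str, Callable[[dict], dict]] = {
    "ip_reputation": lookup_ip_reputation,
    "asset_owner": lookup_asset_owner,
    "user_identity": lookup_user_identity,
}


def choose_next_tool(alert: dict, findings: dict) -> Optional[str]:
    """Stand-in for the model: pick a tool whose answer is still missing."""
    if "source_ip" in alert and "ip_reputation" not in findings:
        return "ip_reputation"
    if "hostname" in alert and "asset_owner" not in findings:
        return "asset_owner"
    if "user" in alert and "user_role" not in findings:
        return "user_identity"
    return None  # nothing left to ask


def investigate(alert: dict, max_steps: int = 5) -> dict:
    """Call tools until nothing is left to ask or the step budget runs out."""
    findings: dict = {}
    for _ in range(max_steps):
        tool_name = choose_next_tool(alert, findings)
        if tool_name is None:
            break
        findings.update(TOOLS[tool_name](alert))
    return findings
```

An alert with only a source IP and a user triggers two tool calls, and the asset lookup is skipped because there is no hostname to resolve. The `max_steps` budget keeps the loop bounded even if the selection logic misbehaves.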

Agents can also be used to capture specialized internal knowledge that represents the patterns of your business and security team. If an agent accomplishes its tasks, the pathway can be stored for future use and expedited analysis.
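One way to picture storing a successful pathway is a small lookup keyed by alert type. This is a minimal in-memory sketch under assumed names (`PathwayStore`, `record_success`, `suggest`); a real deployment would need persistence and review before a stored pathway is reused.

```python
from collections import defaultdict


class PathwayStore:
    """Remembers which sequences of steps resolved each alert type."""

    def __init__(self) -> None:
        # alert type -> {sequence of steps -> number of successful uses}
        self._paths: dict = defaultdict(lambda: defaultdict(int))

    def record_success(self, alert_type: str, steps: list[str]) -> None:
        self._paths[alert_type][tuple(steps)] += 1

    def suggest(self, alert_type: str) -> list[str]:
        """Return the most frequently successful pathway, or [] if none is known."""
        known = self._paths.get(alert_type)
        if not known:
            return []
        return list(max(known, key=known.get))
```

Usage might look like this:

```python
store = PathwayStore()
store.record_success("suspicious_login", ["user_identity", "ip_reputation"])
store.record_success("suspicious_login", ["user_identity", "ip_reputation"])
store.record_success("suspicious_login", ["asset_owner"])
print(store.suggest("suspicious_login"))
```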

The Power of Context

Let's examine how agents handle actual security investigations. Take a suspicious login alert: rather than following a static checklist, the agent builds a dynamic investigation path. It might start with user context, but upon discovering a recent role change, it pivots to analyze peer group behavior patterns. If it finds an unusual application installation on their device, it branches into endpoint history analysis. This fluid approach mirrors how skilled analysts think – following leads rather than procedures.
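The suspicious login example can be sketched as a queue of leads, where each step may surface new leads to follow. The step names and canned findings below are invented for illustration; in practice each step would query real data and the agent would decide which follow-ups are worth pursuing.

```python
from collections import deque

# Hypothetical investigation steps. Each returns (finding, follow-up leads).
STEPS = {
    "user_context": lambda: ("recent role change", ["peer_group_behavior", "device_inventory"]),
    "peer_group_behavior": lambda: ("login pattern differs from peers", []),
    "device_inventory": lambda: ("unusual application installed", ["endpoint_history"]),
    "endpoint_history": lambda: ("no prior malware detections", []),
}


def follow_leads(start: str, max_steps: int = 10) -> list[tuple[str, str]]:
    """Follow leads breadth-first, recording each step and what it found."""
    queue = deque([start])
    visited: set[str] = set()
    trail: list[tuple[str, str]] = []
    while queue and len(trail) < max_steps:
        lead = queue.popleft()
        if lead in visited:
            continue  # never investigate the same lead twice
        visited.add(lead)
        finding, follow_ups = STEPS[lead]()
        trail.append((lead, finding))
        queue.extend(follow_ups)
    return trail
```

The returned trail doubles as an audit record: it shows which leads were followed, in what order, and what each one turned up.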

The key difference isn't just more intelligent automation – it's how agents understand and retain context. While traditional security tools process each alert in isolation, agents maintain a living understanding of your environment’s history. Security incidents unfold as connected stories across systems and time, creating a context web that would overwhelm human memory. Agents excel at maintaining this broad perspective, tracking behavior patterns across cloud services, correlating system interactions, and maintaining perfect recall of historical incidents and their resolutions.

This contextual awareness transforms security operations by considering multiple critical dimensions:

Asset criticality and data sensitivity

Organizational structure and business processes

Temporal patterns and seasonal variations

Recent environmental changes like system updates or policy modifications

Current threat landscape and attack patterns

Regulatory requirements and compliance obligations
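To illustrate how these dimensions could feed a decision, here is a deliberately simple scoring sketch. The field names, scales, and weights are all assumptions chosen for readability. An actual agent would weigh context far less mechanically, but the inputs are the same.

```python
from dataclasses import dataclass


@dataclass
class AlertContext:
    asset_criticality: int           # 1 (low) to 5 (critical)
    data_sensitivity: int            # 1 (public) to 5 (highly sensitive)
    supports_critical_process: bool  # organizational structure and business processes
    outside_normal_hours: bool       # temporal patterns
    recent_change_window: bool       # system update or policy change in progress
    matches_active_threat: bool      # overlaps with the current threat landscape
    in_compliance_scope: bool        # regulatory requirements apply


def priority_score(ctx: AlertContext) -> int:
    """Combine context dimensions into a single triage priority (illustrative weights)."""
    score = ctx.asset_criticality + ctx.data_sensitivity
    if ctx.supports_critical_process:
        score += 2
    if ctx.outside_normal_hours:
        score += 2
    if ctx.matches_active_threat:
        score += 3
    if ctx.in_compliance_scope:
        score += 1
    if ctx.recent_change_window:
        score -= 2  # planned changes often explain otherwise odd activity
    return max(score, 0)
```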

Agents act as copilots, not replacements. They compile comprehensive summaries, suggest next steps based on historical patterns, and surface relevant past incidents. Most importantly, they maintain state across team handoffs, ensuring critical context isn't lost during long-running incidents.

But with this power comes an important question: how do we keep agents operating reliably within defined boundaries?

Agent Safety and Reliability

When SOAR and cloud security tooling emerged, security teams hesitated to implement automated actions in production environments. The fear of causing downtime, data loss, or business instability led to careful, measured approaches to automation. We must apply these same hard-learned lessons to AI agents.

While AI agents offer powerful capabilities, they require similarly careful management through robust reliability engineering practices. The foundation of agent safety lies in explicit boundaries. Every agent action and conclusion must come with clear reasoning, cited data sources, and a stated confidence level. When uncertainty rises, agents must default to gathering more information rather than making low-confidence assessments. This transparency isn't just about building trust – it's about creating verifiable and auditable decision paths.
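A minimal sketch of that rule: a conclusion is only reported if it carries reasoning and data sources, and anything below a confidence threshold sends the agent back to collect more evidence. The `Finding` structure and the 0.8 threshold are assumptions for illustration.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.8  # assumed value; tune per environment


@dataclass
class Finding:
    conclusion: str
    reasoning: str
    data_sources: list[str] = field(default_factory=list)
    confidence: float = 0.0


def next_action(finding: Finding) -> str:
    """Decide what the agent may do with a finding."""
    if not finding.reasoning or not finding.data_sources:
        return "reject"  # conclusions that cannot be audited are never accepted
    if finding.confidence < CONFIDENCE_THRESHOLD:
        return "gather_more_evidence"
    return "report"
```

Because every accepted finding names its sources, a reviewer can later re-run the same queries and check the reasoning.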

Critical security operations should always place a human in the loop. Agents should recognize the boundaries of their capabilities and gracefully transition control to human analysts when encountering novel patterns, high-risk scenarios, or situations requiring judgment beyond their training. This is analogous to a less experienced analyst tapping a 15-year veteran for support during an incident.
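The handoff logic itself can be very small. The sketch below routes a proposed action to a human whenever it is high risk or unlike anything seen before. The action names and the `seen_similar_before` flag are hypothetical; deciding what counts as "novel" is the hard part and is not shown here.

```python
from dataclasses import dataclass

# Assumed examples of actions that should never run without approval.
HIGH_RISK_ACTIONS = {"disable_account", "isolate_host", "revoke_credentials"}


@dataclass
class ProposedAction:
    name: str
    seen_similar_before: bool  # False when the pattern is novel to the agent
    summary: str               # context handed to the analyst on escalation


def route(action: ProposedAction) -> str:
    """Decide whether the agent may proceed or must hand off to a human."""
    if action.name in HIGH_RISK_ACTIONS:
        return "human_approval"
    if not action.seen_similar_before:
        return "human_review"
    return "auto"
```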

Rate limiting, resource constraints, and failure modes must all be explicitly defined for each tool an agent can use, such as a tool that closes alerts. An agent investigating an incident should never trigger additional work through its actions that could result in manual cleanup efforts. Every agent deployment needs clear fallback mechanisms and circuit breakers to maintain utility.
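Here is one way such guardrails might wrap a tool, sketched with an assumed `GuardedTool` class: a per-minute call limit plus a circuit breaker that disables the tool after repeated failures until a human resets it. The limits shown are placeholders.

```python
import time


class GuardedTool:
    """Wraps a tool function with a rate limit and a simple circuit breaker."""

    def __init__(self, func, max_calls_per_minute=30, max_failures=3, clock=time.monotonic):
        self._func = func
        self._max_calls = max_calls_per_minute
        self._max_failures = max_failures
        self._clock = clock
        self._call_times = []  # timestamps of calls in the last 60 seconds
        self._failures = 0     # consecutive failures

    def __call__(self, *args, **kwargs):
        if self._failures >= self._max_failures:
            raise RuntimeError("circuit open: tool disabled until a human resets it")
        now = self._clock()
        self._call_times = [t for t in self._call_times if now - t < 60]
        if len(self._call_times) >= self._max_calls:
            raise RuntimeError("rate limit exceeded")
        self._call_times.append(now)
        try:
            result = self._func(*args, **kwargs)
        except Exception:
            self._failures += 1
            raise
        self._failures = 0  # a success closes the failure streak
        return result

    def reset(self):
        """Manual reset, intended to be called by a human operator."""
        self._failures = 0
```

Wrapping a hypothetical `close_alert` function as `GuardedTool(close_alert, max_calls_per_minute=10)` means a runaway agent can close at most ten alerts a minute, which bounds how much cleanup a mistake can cause.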

The Future SOC Operating Model

This partnership between human analysts and AI agents represents a fundamental shift in how security operations will evolve—not through wholesale automation but through intelligent augmentation of human capabilities. By handling routine analytical tasks, agents free up human expertise for the more demanding security challenges requiring novel solutions.

The most successful cybersecurity tools will be those that carefully balance agent capabilities with human oversight, always focusing on improving security outcomes rather than replacing human judgment. As agents become more sophisticated, the role of security analysts will evolve toward higher-level strategy, threat hunting, and incident response—areas where human intuition and creativity remain irreplaceable. Success will come from starting narrow and gradually expanding scope as reliability is proven.

The future of security operations isn't about humans versus machines. It's about building effective partnerships that combine the best of both: human intuition and creativity with machine speed and pattern recognition. That's the true promise of the agentic SIEM.
