5 SIEM Capabilities for Detection Engineering

Features to look for and tips for evaluating them.

Welcome to Detection at Scale! This weekly newsletter is for practitioners and leaders responsible for scaling their security operations. It covers topics ranging from Detection Engineering to Cloud Infrastructure to the latest vulnerabilities and breaches. Enjoy!

Detection Engineering is emerging as the future of lean and efficient security operations teams. However, this is not a typical career path: most practitioners started as security analysts or incident responders and then became competent in cloud infrastructure, software development, and data engineering. CISOs and leaders agree on this direction and have been setting expectations that everything must be declared “as-code,” automated, measured, and optimized. In Season 1 of the Detection at Scale podcast, Nir Rothenberg from Rapyd described his mentality for building out a Detection Engineering function (starting at 11:00):

“I only want a team that can not ONLY respond to alerts, but can improve the capabilities behind those alerts. Ultimately, it leads to much less fatigue.”

Detection Engineering begins with “the why” behind alerts and leads to “the how” of responding to those alerts. In Unraveling SIEM Correlation Techniques, we covered the processes behind modeling attacker techniques to improve alert quality.

As security teams adopt a Detection Engineering approach, vendors and platforms must adapt to support them. In this blog post, we’ll explore five ideal capabilities that support detection engineering: a data pipeline, a scalable query engine, detection as code, an API, and BYOC deployments. If you enjoy this post, please share it with someone who would find it helpful or leave a comment!

Data Pipeline

Data is the new gold, and “all organizations are secretly becoming AI companies.” A well-designed data pipeline is key to high-quality data and effective security monitoring:

A data pipeline is a series of processing steps to prepare enterprise data for analysis, and includes various technologies to verify, summarize, and find patterns in data to inform business decisions. Raw data is useless; it must be moved, sorted, filtered, reformatted, and analyzed for business intelligence. - Amazon

Data pipelines manage all the steps between a raw audit log and a structured, compressed, filtered, enriched, column-based row in a data warehouse, ready for intelligence and insights. Many organizations have existing pipelines that power business intelligence and internal applications. However, security has lagged because monolithic SIEMs historically operated like log dumping grounds, walled off for security teams alone. Moving to the cloud has pushed security teams to adopt these mature data engineering patterns, and structured data is a key enabler for scaling Detection Engineering.

When evaluating data pipeline capabilities, look for:

Integrations: Support for key log sources, popular security tools, or interfacing with existing warehouse datasets. If the platform can also pull from user-defined APIs, even better for the long-term sustainability of your organization's needs.

Parsing and data formats: Parsers translate raw logs into structured logs. Typical parsers include JSON, CSV, TSV (tab-separated), regex, and grok. Pay close attention to the resulting data model, such as ECS or OCSF, because it shapes your downstream queries and rules. Robust parsing capabilities are often required to ingest custom internal datasets based on user-defined formats.

Filtering and transformation: As we covered in filtering, sifting out junk logs is important for high data quality. The SIEM should be able to drop logs based on regular expressions or another search language. Additionally, on-the-fly data transformations can insert tags, create derived fields, or help unnest valuable data hiding deep in the log.

Routing: Not all data must stay “hot” in the SIEM; some may only be needed for compliance or large-scale historical incident response. Look for routing capabilities that support low-cost data storage tiers and multiplexing data to multiple backends.

While an entire blog post could be written on each of these capabilities, we’ll keep it brief!
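To make the parse, filter, transform, and route steps above concrete, here is a minimal sketch in plain Python. It is not how any particular SIEM implements its pipeline; the drop pattern, the set of "hot" event names, and the `actor_arn` and `tags` fields are all hypothetical choices for illustration.

```python
import json
import re

# Hypothetical drop pattern: health-check noise with no detection value.
DROP_PATTERN = re.compile(r"ELB-HealthChecker")

# Hypothetical routing rule: only these event names stay in "hot" storage.
HOT_EVENT_NAMES = {"ConsoleLogin", "DeleteTrail", "StopLogging"}


def keep(raw_line):
    """Filter: return False for lines that match the noise pattern."""
    return DROP_PATTERN.search(raw_line) is None


def parse(raw_line):
    """Parse a raw JSON log line into a dict; return None if it is malformed."""
    try:
        event = json.loads(raw_line)
    except json.JSONDecodeError:
        return None
    return event if isinstance(event, dict) else None


def transform(event):
    """Transform: unnest a deeply nested field and insert tags."""
    out = dict(event)
    out["actor_arn"] = event.get("userIdentity", {}).get("arn")
    out["tags"] = ["aws", "cloudtrail"]
    return out


def route(event):
    """Route: pick the storage tier that should receive the event."""
    return "hot" if event.get("eventName") in HOT_EVENT_NAMES else "cold"


def run_pipeline(raw_lines):
    """Run filter -> parse -> transform -> route and group events by tier."""
    tiers = {"hot": [], "cold": []}
    for line in raw_lines:
        if not keep(line):
            continue
        event = parse(line)
        if event is None:
            continue
        event = transform(event)
        tiers[route(event)].append(event)
    return tiers
```

In a real platform each stage would be configuration rather than hand-written code, but the order of operations is the part worth evaluating: dropping junk before parsing saves compute, and routing after enrichment means cold storage still holds structured rows.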

Scalable Analytics

Now that we have cleaned, formatted, and structured data, we need somewhere to put it and process it for threat detection. Two key elements powering analytics are a data repository in the form of a “security data lake” and a query engine to load and process that data to find hits and anomalies.

Security data lakes are increasing in popularity because of the need to develop detection as code (next section) and to run large-scale historical analytics, such as “Who logged into machine XYZ on March 2nd, 2020?” Traditional SIEMs were hindered by their tight coupling between storage and compute, making it difficult to achieve petabyte-scale warehouses and deeper insights into your security data.
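Once logs are structured, a historical question like the one above becomes a simple filter. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in for a real query engine; the `logins` table and its columns are hypothetical, and a production data lake would hold far more data in a columnar format.

```python
import sqlite3


def build_sample_db():
    """Create an in-memory table with a few hypothetical login events."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logins (event_time TEXT, host TEXT, username TEXT)")
    conn.executemany(
        "INSERT INTO logins VALUES (?, ?, ?)",
        [
            ("2020-03-02 08:15:00", "XYZ", "alice"),
            ("2020-03-02 17:40:00", "XYZ", "bob"),
            ("2020-03-02 09:00:00", "ABC", "carol"),
            ("2020-03-03 10:30:00", "XYZ", "dave"),
        ],
    )
    return conn


def who_logged_in(conn, host, day):
    """Return the distinct users who logged into `host` on `day` (YYYY-MM-DD)."""
    rows = conn.execute(
        """
        SELECT DISTINCT username
        FROM logins
        WHERE host = ? AND date(event_time) = ?
        ORDER BY username
        """,
        (host, day),
    ).fetchall()
    return [row[0] for row in rows]
```

Calling `who_logged_in(build_sample_db(), "XYZ", "2020-03-02")` returns only the users seen on that host and day. The query itself is trivial; the hard part at scale is an engine that can scan years of data to answer it.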

Data lakes also provide control and ownership over your data for applications outside the SIEM, such as advanced data science or interfacing with other internal teams. This is also emerging as a common pattern with the increasing popularity of open table formats like Apache Iceberg and Delta Lake, breaking free of proprietary data formats that can only be read from a single engine.

The query engine is the enabler for those analytics and comes in many forms. When evaluating query engines, look for:

Language: Is the query language simple and easy for your team?

Capabilities: Which analytical functions are available for processing data? Do they allow you to find the type of activity relevant to your threat model? Can you define custom functions?

Enabling AI: Does the query engine or platform support training models, anomaly detection, or other capabilities to future-proof your detection frameworks?

Scale: Can the query engine auto-scale based on your need to look back months or years during an investigation?

Data Formats: Does the engine read from multiple data formats like Parquet?
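As an illustration of the custom function question above, here is a minimal sketch that registers a user-defined function with a query engine. It again uses `sqlite3` as a stand-in; the function name `is_external_ip` and the `logins` table with `username` and `source_ip` columns are assumptions made for this example.

```python
import ipaddress
import sqlite3


def is_external_ip(value):
    """Return 1 if `value` is a globally routable IP address, else 0."""
    try:
        return int(ipaddress.ip_address(value).is_global)
    except (ValueError, TypeError):
        # Unparsable or missing values are treated as "not external".
        return 0


def external_logins(conn):
    """Query logins whose source IP is public, using the custom function in SQL."""
    # Register the Python function so SQL can call it with one argument.
    conn.create_function("is_external_ip", 1, is_external_ip)
    return conn.execute(
        """
        SELECT username, source_ip
        FROM logins
        WHERE is_external_ip(source_ip) = 1
        ORDER BY username
        """
    ).fetchall()
```

The same idea applies to any engine that supports user-defined functions: logic that is awkward to express in the query language lives in one tested helper instead of being copied into every rule.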

Now that we have prepped data, we can analyze it.

Detection as Code

Most engineers write code… But in 2024, AI is responsible for 50% of it. Structured data is the fuel for a great analytics program, and Detection as Code (DaC) is the framework to drive security insights:

Detection as Code is a set of principles that use code and automation to implement and manage threat detection capabilities. - Building a DaC pipeline by David French

DaC (Detection as Code) is the security version of IaC (Infrastructure as Code) but for rules. Previously, SIEM rules were manually configured and tuned, often leading to attribution issues, a lack of change management and collaboration, and bloated queries that nobody understood. Detection as Code is backed by software engineering best practices to declare our detection logic sustainably while building automation from the beginning.

Today, DaC systems come in all shapes, sizes, and languages, from formats like YAML/TOML to full programming languages like Python to signature languages like YARA-L. As long as a structured format exists and can be checked into version control systems (VCS), it is safe to call it DaC!

When evaluating the capabilities of a DaC framework, look for:

A Test Suite: Declaring as code is only as powerful as the tests that back it up. This often requires inserting sample logs that fire alerts or test logic in multiple parts of the rule. Tests will help in simple attack simulation but, more importantly, protect against regressions as rules evolve.

CI/CD Integration: GitOps automates the staging and deployment of new rules into the SIEM directly from VCS or according to your organization's best practices. Combining an API endpoint with boilerplate CI/CD scripts helps streamline automated deployments.

Built-In Content: No team wants to build their corpus of rules fully from scratch. Instead, the SIEM should come pre-baked with example rules, libraries, and other patterns for tailoring to your organization's needs.
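To show what a test suite backed by sample logs can look like, here is a minimal, framework-agnostic sketch. The rule, the test-case layout, and `run_tests` are hypothetical and not taken from any vendor's framework; the log fields follow a simplified CloudTrail `ConsoleLogin` event.

```python
def rule(event):
    """Fire when a console login succeeds without MFA."""
    if event.get("eventName") != "ConsoleLogin":
        return False
    if event.get("responseElements", {}).get("ConsoleLogin") != "Success":
        return False
    return event.get("additionalEventData", {}).get("MFAUsed") != "Yes"


# Each test case pairs a sample log with the expected rule outcome.
TESTS = [
    {
        "name": "successful login without MFA fires",
        "expected": True,
        "log": {
            "eventName": "ConsoleLogin",
            "responseElements": {"ConsoleLogin": "Success"},
            "additionalEventData": {"MFAUsed": "No"},
        },
    },
    {
        "name": "successful login with MFA does not fire",
        "expected": False,
        "log": {
            "eventName": "ConsoleLogin",
            "responseElements": {"ConsoleLogin": "Success"},
            "additionalEventData": {"MFAUsed": "Yes"},
        },
    },
    {
        "name": "failed login does not fire",
        "expected": False,
        "log": {
            "eventName": "ConsoleLogin",
            "responseElements": {"ConsoleLogin": "Failure"},
            "additionalEventData": {"MFAUsed": "No"},
        },
    },
]


def run_tests(rule_fn, tests):
    """Return the names of failing test cases; an empty list means all passed."""
    return [t["name"] for t in tests if rule_fn(t["log"]) != t["expected"]]
```

A CI job can call `run_tests(rule, TESTS)` on every pull request and block the merge when the list is not empty, which is what protects against regressions as the rule evolves.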

Let’s examine a commonly used example rule, “CloudTrail Logging Disabled,” as implemented for Elastic, Sigma, Chronicle, and Panther. Each rule targets the same behavior, with slight variances in data source, scope, and DaC framework.

[rule]
author = ["Elastic"]
description = "Identifies the deletion of an AWS log trail. An adversary may delete trails in an attempt to evade defenses."
false_positives = [
    """
    Trail deletions may be made by a system or network administrator. Verify whether the user identity, user agent,
    and/or hostname should be making changes in your environment. Trail deletions by unfamiliar users or hosts should be
    investigated. If known behavior is causing false positives, it can be exempted from the rule.
    """,
]
from = "now-60m"
index = ["filebeat-*", "logs-aws*"]
interval = "10m"
language = "kuery"
license = "Elastic License v2"
name = "AWS CloudTrail Log Deleted"
risk_score = 47
rule_id = "7024e2a0-315d-4334-bb1a-441c593e16ab"
severity = "medium"
tags = ["Domain: Cloud", "Data Source: AWS", "Data Source: Amazon Web Services", "Use Case: Log Auditing", "Resources: Investigation Guide", "Tactic: Defense Evasion"]
timestamp_override = "event.ingested"
type = "query"
query = '''
event.dataset:aws.cloudtrail and event.provider:cloudtrail.amazonaws.com and event.action:DeleteTrail and event.outcome:success
'''

title: AWS CloudTrail Important Change
description: Detects disabling, deleting and updating of a Trail
author: vitaliy0x1
date: 2020/01/21
modified: 2022/10/09
tags:
    - attack.defense_evasion
    - attack.t1562.001
logsource:
    product: aws
    service: cloudtrail
detection:
    selection_source:
        eventSource: cloudtrail.amazonaws.com
        eventName:
            - StopLogging
            - UpdateTrail
            - DeleteTrail
    condition: selection_source
falsepositives:
    - Valid change in a Trail
level: medium

rule aws_cloudtrail_logging_tampered {
  meta:
    author = "Google Cloud Security"
    description = "Detects when CloudTrail logging is updated, stopped or deleted."
    mitre_attack_tactic = "Defense Evasion"
    mitre_attack_technique = "Impair Defenses: Disable Cloud Logs"
    mitre_attack_url = "https://attack.mitre.org/techniques/T1562/008/"
    mitre_attack_version = "v13.1"
    type = "Alert"
    data_source = "AWS CloudTrail"
    platform = "AWS"
    severity = "High"
    priority = "High"
  events:
    $cloudtrail.metadata.vendor_name = "AMAZON"
    $cloudtrail.metadata.product_name = "AWS CloudTrail"
    (
      $cloudtrail.metadata.product_event_type = "UpdateTrail" or
      $cloudtrail.metadata.product_event_type = "StopLogging" or
      $cloudtrail.metadata.product_event_type = "DeleteTrail" or
      $cloudtrail.metadata.product_event_type = "PutEventSelectors"
    )
    $cloudtrail.security_result.action = "ALLOW"
    $cloudtrail.principal.user.user_display_name = $user_id
  match:
    $user_id over 1h
  condition:
    $cloudtrail
}

from panther_base_helpers import aws_rule_context, deep_get
from panther_default import aws_cloudtrail_success

SECURITY_CONFIG_ACTIONS = {
    "DeleteAccountPublicAccessBlock",
    "DeleteDeliveryChannel",
    "DeleteDetector",
    "DeleteFlowLogs",
    "DeleteRule",
    "DeleteTrail",
    "DisableEbsEncryptionByDefault",
    "DisableRule",
    "StopConfigurationRecorder",
    "StopLogging",
}

def rule(event):
    if not aws_cloudtrail_success(event):
        return False
    if event.get("eventName") == "UpdateDetector":
        return not deep_get(event, "requestParameters", "enable", default=True)
    return event.get("eventName") in SECURITY_CONFIG_ACTIONS

These are just samples of what’s possible with Detection as Code and how the various languages and frameworks allow creativity, simplicity, or modularity. Customers using this content can also make the necessary changes to fit their organization's needs.

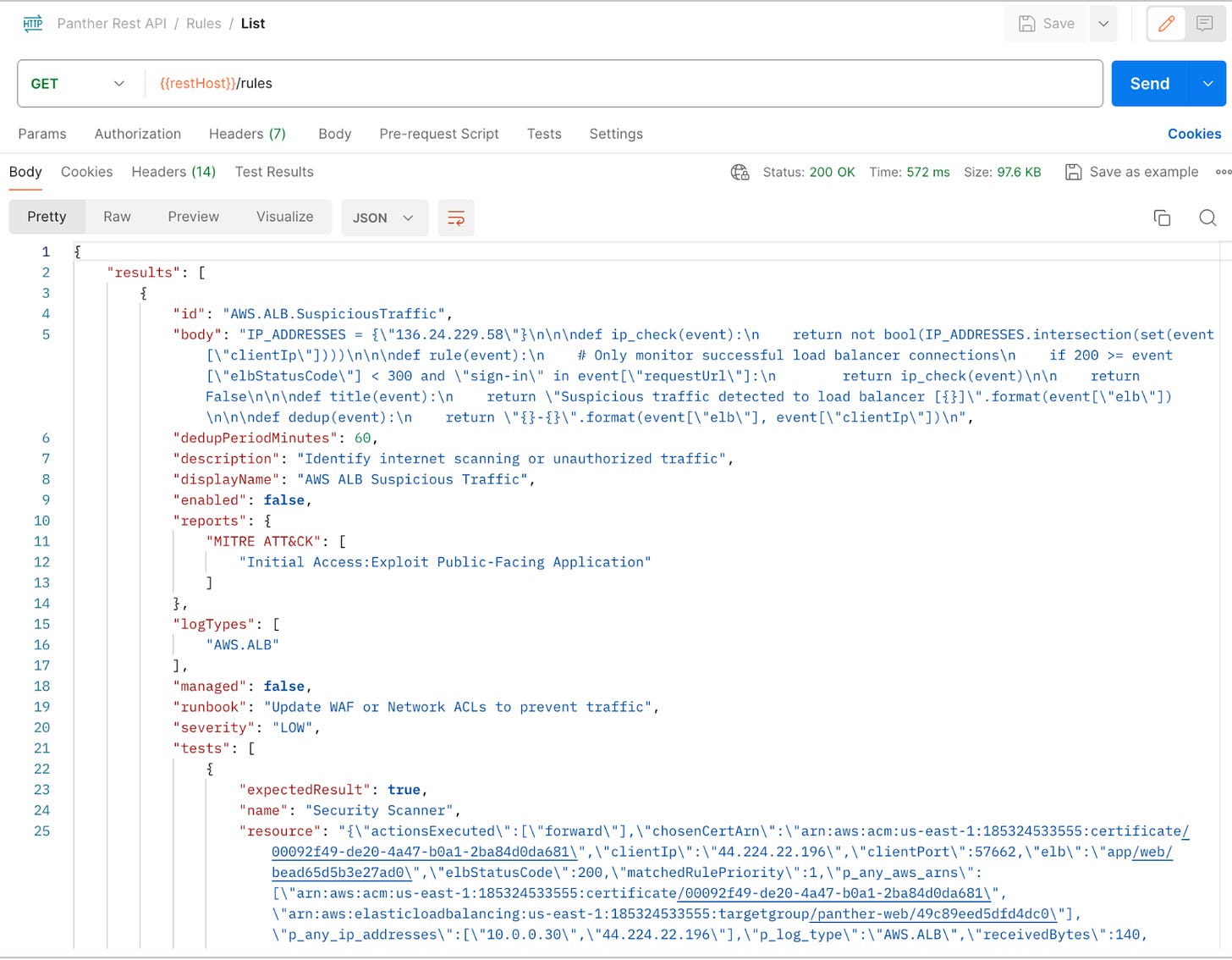

API

Most day-to-day operations as a Detection Engineer revolve around writing code, building automation, and improving existing monitoring capabilities. An API is a key component of automated workflows because it provides an interface to the platform's underlying capabilities, and modern SIEMs should have one.

REST APIs provide CRUD operations across core SIEM workflows: Data Pipeline, Rules, Alerts, and Search. Detection Engineers can utilize APIs to update rules programmatically, issue pivots and queries, close multiple alerts, or onboard new log sources. The API is also a key enabler to connect SOARs and external automation into the SIEM, and vendors should offer an SDK, CLI, or set of endpoints accessible through tokens.

When evaluating an API, look for:

Key Rotation: Can API keys be easily rotated for better security?

Permissions: Can we restrict the API key’s permissions following Least Privilege?

Endpoints: Which operations are possible via the API? What can I automate on my own?

Auditing: Do actions taken with the API key get logged in the SIEM? We need (meta) audit logs to ensure no abuse happens using these keys.
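As a sketch of what updating a rule programmatically might look like, the snippet below builds (but does not send) an authenticated REST request with Python's standard library. The base URL, the `/rules/{rule_id}` path, the JSON body fields, and the bearer-token scheme are all hypothetical; substitute whatever your vendor's API documentation specifies.

```python
import json
import urllib.request

# Hypothetical base URL; replace with your SIEM's documented API endpoint.
BASE_URL = "https://siem.example.com/api/v1"


def build_rule_update(rule_id, body, token):
    """Build a PUT request that would update one rule. Nothing is sent here."""
    return urllib.request.Request(
        url=f"{BASE_URL}/rules/{rule_id}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            # Assumes token-based auth; scope the token to rule updates only.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

A CI/CD job could pass the result to `urllib.request.urlopen` after tests pass, reading the token from a secret store rather than from the repository. Keeping the token narrowly scoped and regularly rotated ties directly back to the checklist above.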

BYOC Deployment

The goal of the SIEM is to be the de facto data store for all security data, and organizations want cost-effective ways to process and own the data at scale.

BYOC (bring your own cloud), where infrastructure software runs inside the customer’s cloud account, is re-emerging. While SaaS is an effective model for serving applications, the cost of scaling a large SIEM or data-intensive platform can be prohibitive. Additionally, moving data between a source account and a vendor’s cloud can strain the pricing model or rack up egress fees (although cloud providers are evolving here).

In addition to the cost concerns, organizations also have privacy requirements or operate in highly regulated industries. SIEMs that allow the customer to own the data protect against accidental exposure.

When deploying SIEMs into your cloud, look for Infrastructure as Code capabilities to make management predictable and stable over the long term.
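For a feel of what Infrastructure as Code means here, the sketch below generates a small AWS CloudFormation template from Python for an encrypted log archive bucket with a lifecycle rule. It is an illustration only: the logical ID `LogArchiveBucket`, the rule ID, and the 90-day default are assumptions, and a real BYOC deployment would define far more than a single bucket.

```python
import json


def log_bucket_template(bucket_name, days_until_archive=90):
    """Return a CloudFormation template (as a dict) for an encrypted log bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Log archive bucket for a SIEM deployed in our own account",
        "Resources": {
            "LogArchiveBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "BucketName": bucket_name,
                    # Encrypt objects at rest by default.
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                        ]
                    },
                    # Move older logs to a lower-cost storage class.
                    "LifecycleConfiguration": {
                        "Rules": [
                            {
                                "Id": "ArchiveOldLogs",
                                "Status": "Enabled",
                                "Transitions": [
                                    {
                                        "StorageClass": "GLACIER",
                                        "TransitionInDays": days_until_archive,
                                    }
                                ],
                            }
                        ]
                    },
                },
            }
        },
    }


def render(template):
    """Serialize the template to JSON so it can be checked into version control."""
    return json.dumps(template, indent=2, sort_keys=True)
```

Because the template is generated and stored as text, changes to the deployment go through the same review and version control process as detection rules.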

What’s Next?

Every security team wants better alerts, and that starts with great data. The future of security will center on AI, and the detection landscape is evolving to require strong data and engineering practices. The capabilities covered in this post are crucial for supporting modern detection engineering, and we expect them to become more standard over time, helping to even the score against attackers.

If you enjoyed this post, be sure to subscribe! Thank you!

Additional Recommended Reading